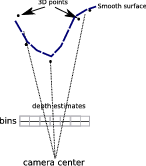

I recently tested out an idea: using a view-dependent, Laplacian-smoothed depth map for rendering. Given a set of 3D points and a camera viewpoint, the objective is to generate a dense surface for rendering. The options are to triangulate the points in 3D, triangulate them in the image, or use some other interpolation (or surface contouring).

This was a quick test of partitioning the image into bins and using the z-values of the projected points as constraints on a linear system, with the Laplacian as the smoothness term. The result is a view-dependent depth that looks pretty good.
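To make the idea concrete, here is a minimal sketch of that kind of system, not the actual code used for the videos. It assumes one depth unknown per bin, a 4-neighbour graph Laplacian, and a plain dense least-squares solve. The function name `smooth_depth` and the parameters `width`, `height`, `nx`, `ny` and `lam` are made up for illustration.

```python
import numpy as np

def smooth_depth(px, py, z, width, height, nx=8, ny=8, lam=1.0):
    """Fit one depth per image bin (hypothetical helper, see assumptions above).

    px, py : pixel coordinates of the projected 3D points
    z      : depth of each point in the camera frame
    lam    : weight of the Laplacian smoothness rows
    """
    # Assign every projected point to a bin.
    bx = np.clip((px / width * nx).astype(int), 0, nx - 1)
    by = np.clip((py / height * ny).astype(int), 0, ny - 1)
    idx = by * nx + bx
    n = nx * ny
    count = np.bincount(idx, minlength=n)
    zsum = np.bincount(idx, weights=z, minlength=n)

    rows, rhs = [], []
    # Data rows: each occupied bin should match its average depth.
    for k in np.flatnonzero(count):
        r = np.zeros(n)
        r[k] = 1.0
        rows.append(r)
        rhs.append(zsum[k] / count[k])
    # Smoothness rows: the graph Laplacian at each bin should be zero.
    for j in range(ny):
        for i in range(nx):
            r = np.zeros(n)
            for j2, i2 in ((j - 1, i), (j + 1, i), (j, i - 1), (j, i + 1)):
                if 0 <= j2 < ny and 0 <= i2 < nx:
                    r[j * nx + i] += lam
                    r[j2 * nx + i2] -= lam
            rows.append(r)
            rhs.append(0.0)

    A = np.array(rows)
    b = np.array(rhs)
    d, *_ = np.linalg.lstsq(A, b, rcond=None)
    return d.reshape(ny, nx)
```

Empty bins get no data row, so the smoothness rows alone fill them in from their neighbours. A dense solve is fine for a coarse grid; a finer grid would want a sparse solver.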

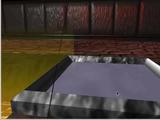

Check out the videos below. They show screenshots of two alternatives used for view-dependent texturing in a predictive display setting. Basically, an operator controls a robot that has a camera on it. There is some delay in the robot's response to the operator's commands (mostly due to the velocity and acceleration constraints on the real robot). The operator, however, is presented with real-time feedback of the expected position of the camera. This is done by taking the most recent geometry and projectively texture mapping it with the most recent image. The videos show the real robot view (from PTAM) in the foreground and the predicted view (which uses the geometric proxy) in the background. Notice the lag in the foreground image. The smooth surface has a wireframe overlay of the bins used to compute the surface. It works pretty well.

- smooth-surface

- Or an alternative: tet-surface

Currently, the constraints are obtained from the average depth, although it may be more appropriate to use the min depth for the constraints. A slightly more detailed document is available (although it is pretty coarse): lapproxy.pdf
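For comparison, the two choices of per-bin constraint can be sketched as below. This is only an illustration, assuming each point has already been assigned a bin index. The helper name `bin_depths` and its `mode` argument are hypothetical. The intuition for the minimum is presumably that the nearest point in a bin is the one the camera actually sees, whereas the average blends foreground and background.

```python
import numpy as np

def bin_depths(idx, z, n_bins, mode="mean"):
    """Per-bin depth constraint; empty bins are returned as NaN.

    idx  : bin index of each projected point
    z    : depth of each projected point
    mode : "mean" for the average depth, "min" for the nearest depth
    """
    out = np.full(n_bins, np.nan)
    if mode == "mean":
        count = np.bincount(idx, minlength=n_bins)
        zsum = np.bincount(idx, weights=z, minlength=n_bins)
        occupied = count > 0
        out[occupied] = zsum[occupied] / count[occupied]
    elif mode == "min":
        mins = np.full(n_bins, np.inf)
        # Unbuffered in-place minimum, so repeated indices are handled.
        np.minimum.at(mins, idx, z)
        occupied = np.isfinite(mins)
        out[occupied] = mins[occupied]
    else:
        raise ValueError("mode must be 'mean' or 'min'")
    return out
```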

Another improvement would be to introduce some constraints over time (this could ensure that the motion of the surface over time is slow).
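One way this might look, as an untested idea rather than anything implemented: if the surface is solved as a least-squares system `A d = b`, extra rows can pull each depth toward its value in the previous frame. The helper `add_temporal_rows` and the weight `mu` are assumptions for illustration.

```python
import numpy as np

def add_temporal_rows(A, b, d_prev, mu=0.5):
    """Append rows mu * (d - d_prev) = 0 to a least-squares system A d = b.

    A larger mu keeps the surface closer to the previous frame's depths.
    """
    d_prev = np.asarray(d_prev, dtype=float).ravel()
    n = d_prev.size
    A_t = np.vstack([A, mu * np.eye(n)])
    b_t = np.concatenate([b, mu * d_prev])
    return A_t, b_t
```

The augmented system is solved exactly like the original one, for example with `np.linalg.lstsq(A_t, b_t, rcond=None)`.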