Archive for category Projects

Image-Based Face Tracking for VR

Posted by in Projects on August 10th, 2008

This was not so much a research topic as an attempt to duplicate a popular YouTube video that did head tracking for VR using a WiiMote.

A company called seeingmachines has a commercial head tracker that they use for a similar purpose.

The non-pattern based one uses a 4DOF SSD tracker. I’m currently working on a port of the demo for the Mac.
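For readers unfamiliar with SSD (sum of squared differences) tracking, here is a minimal sketch of the idea. It is not the tracker used in the demo: it searches exhaustively over translation only (two of the four degrees of freedom; the post does not say what the other two are), whereas practical SSD trackers usually refine the parameters iteratively. The names `ssd` and `track_translation` are made up for illustration.

```python
def ssd(image, template, ox, oy):
    """Sum of squared differences between the template and the image patch at (ox, oy)."""
    total = 0.0
    for ty, row in enumerate(template):
        for tx, t in enumerate(row):
            diff = image[oy + ty][ox + tx] - t
            total += diff * diff
    return total


def track_translation(image, template):
    """Exhaustively search for the (x, y) offset with the lowest SSD score."""
    th, tw = len(template), len(template[0])
    best = None
    for oy in range(len(image) - th + 1):
        for ox in range(len(image[0]) - tw + 1):
            score = ssd(image, template, ox, oy)
            if best is None or score < best[0]:
                best = (score, ox, oy)
    return best[1], best[2]
```

Images here are plain lists of rows of grayscale values; a real tracker would run this kind of comparison on every frame, starting from the previous frame's estimate.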

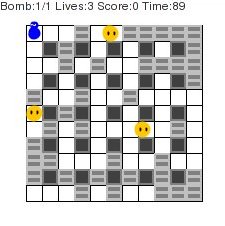

Bomerman 4k

Posted by in Projects on August 7th, 2008

Yep, looks pretty crappy. It isn’t that easy to make a game fit into 4096 bytes. I thought it would be easier, but once you set up a region to draw and get your keyboard input, you really don’t have much space to work with, not to mention the overhead from extra files in the jar file and the extra space taken by having multiple classes.

There are some real contenders for these types of games up at Java unlimited, which hosts a 4k game contest.

Executable (bomb.jar)

Source Code

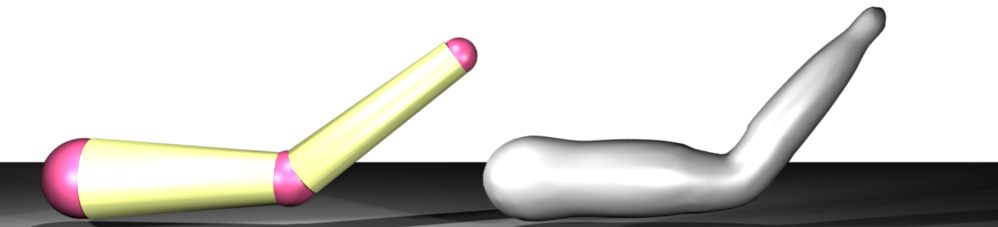

PhD Progress

Posted by in Projects on August 7th, 2008

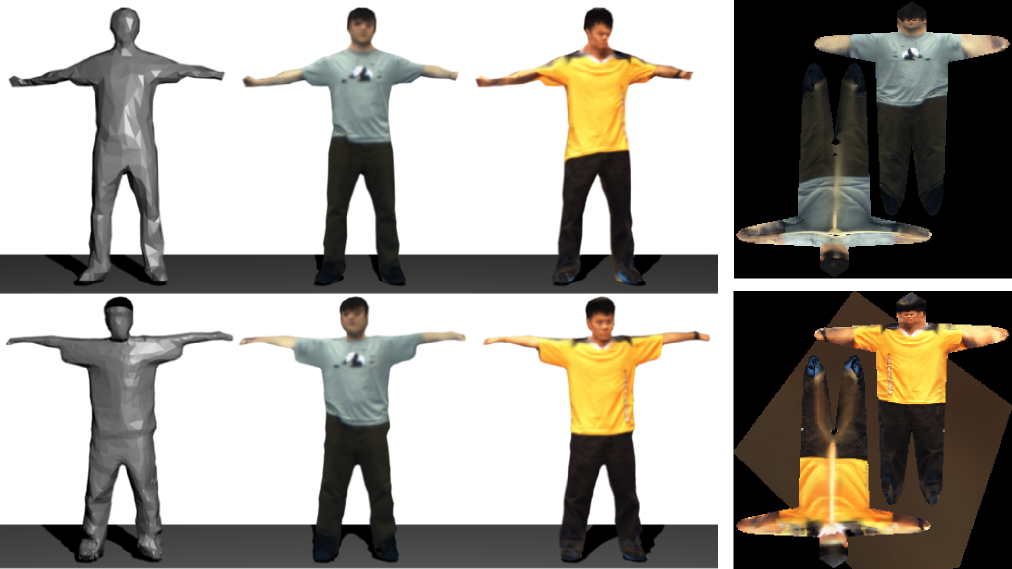

Vision-based Modeling of Dynamic Appearance and Deformation of Human Actors


Abstract:

The goal of the proposed research is to capture a concise representation of human deformation and appearance in a low-cost environment that can be seamlessly integrated into entertainment and Virtual Reality applications, which often require relighting, high levels of realism, and rendering from novel viewpoints. Geometric modeling techniques exist in the current state of the field, but they focus solely on geometric deformation and ignore the appearance component. I propose a combined model of both geometric and appearance deformation, leading to a more compact representation while still achieving high levels of realism. Furthermore, many existing methods require input data from artists or expensive laser scanners. The proposed vision-based method is less expensive and requires only limited manual intervention.

In a controlled environment, several cameras will be used to acquire a dense static geometry and a basic appearance model of a street-clothed human (e.g., a texture mapped model). A skeletal model is manually aligned with the existing geometry, and the actor performs a sequence of predefined motions. Multi-image vision-based tracking techniques are used to extract the kinematic motion parameters that account for the gross motion of the object. Multi-view stereo, silhouette cues, and temporal coherence are used to extract the time-varying residual geometric deformation. These time-dependent geometric quantities and the time- and view-dependent photometric quantities are used to create the final compact model of appearance and geometric variation. Following several graphics techniques for modeling articulated deformation and leveraging existing tools for image-based rendering, the deformation model is built on top of linear blend skinning. Appearance and geometric variation are modeled together as a function of abstract interpolating parameters that include, e.g., relative joint angles and view angles. Examples of deformation include muscle bulging and some forms of cloth budging. We model this compactly using dimensionality reduction and sparse data interpolation, where several low-dimensional subspaces and corresponding interpolation spaces are used, effectively clustering portions of the surface that are affected by the same abstract interpolators. In contrast to many of the vision-based human modeling techniques, our complete model can be re-rendered under novel viewpoints, novel animation, and even novel illumination when the illumination during capture is calibrated.

The specified model also has potential applications in appearance and deformation transfer between different subjects. Furthermore, the combined geometric and appearance model will almost directly transfer to similar domains, such as modeling hand deformations, or related domains, such as facial deformations. An improved model of geometry and appearance could also be used to improve markerless vision-based motion capture. This work also hopes to better identify the range of clothing and deformations that can be both captured and satisfactorily modeled as a function of relative joint angles, neighboring rigid body velocities, and other related external factors, such as the direction of gravity and wind.
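As background for the linear blend skinning mentioned in the abstract, here is a minimal 2D sketch of the basic operation: each vertex is moved to the weighted average of where each bone's rigid transform would carry it. This only illustrates the standard base technique, not the proposed model (which adds residual geometric and appearance variation on top); the helper names `rotation`, `apply`, and `skin_vertex` are hypothetical.

```python
import math


def rotation(angle, pivot):
    """2D rigid transform (rotation about pivot) as a 2x3 row-major matrix."""
    c, s = math.cos(angle), math.sin(angle)
    px, py = pivot
    return [[c, -s, px - c * px + s * py],
            [s, c, py - s * px - c * py]]


def apply(m, p):
    """Apply a 2x3 transform to the point p = (x, y)."""
    x, y = p
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


def skin_vertex(rest_pos, weights, bone_transforms):
    """Linear blend skinning: weighted sum of each bone's transformed position."""
    x = y = 0.0
    for w, m in zip(weights, bone_transforms):
        bx, by = apply(m, rest_pos)
        x += w * bx
        y += w * by
    return (x, y)
```

A vertex near a joint typically has non-zero weights for both neighboring bones (the weights sum to one), which is what gives the smooth bend. It is also the source of the well-known volume loss that residual deformation models try to correct.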

Documents

The third version of the candidacy report is now available. The document is currently a work in progress and should not be redistributed: candidacy.pdf (Updated: Wed Apr 18 12:01)

Update: I passed my candidacy; more details relevant to the exam are on a separate page.

Media

Multi-view tracking preliminary results.

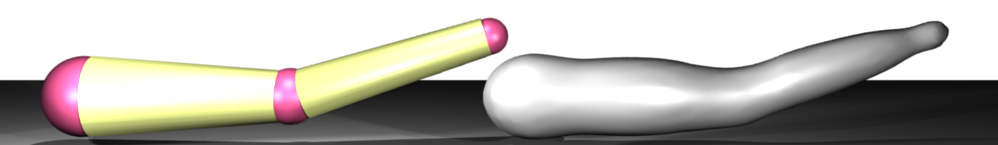

Testing of collision bounding representation

ben_collision.avi

levset

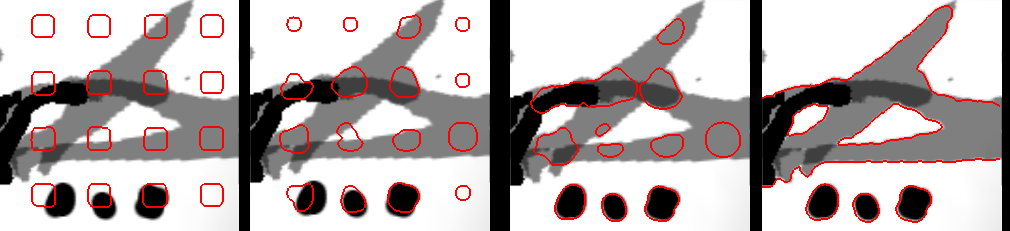

Posted by in Projects on July 20th, 2008

This library contains classes that implement 2D/3D levelsets (full and narrow-band).

Features:

- explicit and implicit schemes for basic evolution equations.

- marching squares/cubes to obtain a surface.

- fast marching method (FMM) for re-initialization

- loading/saving data

There are also SIP Python bindings, making it easy to prototype a solution. For example, to perform Chan-Vese segmentation, you would write a function that defines the evolution:

    import math

    # Evolution function: returns how much to move and the maximum time step.
    # ak, xsp, ysp and dirac are assumed to be defined elsewhere
    # (presumably the curvature weight, the grid spacings and a smoothed delta).
    def fun(levset, image, stats, x, y):
        l = levset(x, y)
        d, k = dirac(l, 2.0), levset.getCurvature(x, y)
        pix = (image.pixel(x, y) & 0xFF) / 255.0
        pin, pout = (pix - stats[0])**2, (pix - stats[1])**2
        upd = d * (ak * k + (pin - pout))
        return (upd, 0.98 / (math.fabs(d * (2.0*ak/(xsp*xsp) + 2.0*ak/(ysp*ysp))) + 0.01))

You would then interleave this function call (bound with extra parameters using a lambda function) with the levelset motion and re-initialization calls:

    func = lambda levset, x, y: fun(levset, self.image, stats, x, y)
    while not done:
        stats = computeStats()
        for i in range(3):
            levset.moveWithCurvature(func)
        levset.ensureSignedDistanceFMM()
        # ...
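The snippets above call `dirac` and `computeStats()` without showing them. The sketch below gives one plausible version of each, assuming the usual Chan-Vese ingredients: a regularized Dirac delta and the mean intensity on each side of the contour. It is not the library's code. `compute_stats` is a hypothetical stand-in that works on plain lists of rows, and the convention that the inside is where the level set is negative is an assumption.

```python
import math


def dirac(phi, eps):
    """Regularized Dirac delta: (1/pi) * eps / (eps^2 + phi^2)."""
    return eps / (math.pi * (eps * eps + phi * phi))


def compute_stats(phi, image):
    """Mean intensity inside (phi < 0, assumed) and outside (phi >= 0) the contour.

    phi and image are equally sized lists of rows; intensities are in [0, 1].
    """
    sums, counts = [0.0, 0.0], [0, 0]
    for phi_row, img_row in zip(phi, image):
        for p, v in zip(phi_row, img_row):
            region = 0 if p < 0 else 1
            sums[region] += v
            counts[region] += 1
    # Guard against an empty region to avoid dividing by zero.
    return tuple(s / c if c else 0.0 for s, c in zip(sums, counts))
```

The smoothed delta concentrates the update near the zero level set, and the two means are what the `(pix - stats[0])**2` and `(pix - stats[1])**2` terms compare each pixel against.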

nmath

Posted by in Projects on July 20th, 2008

Basic math and vector routines. Features:

- Special classes for common vector/matrix sizes. General matrix class with an interface to LAPACK for numerical routines.

- MINPACK for non-linear least squares.

- Smart pointered data; all copies are shallow.

- Python interface.

nimage

Posted by in Projects on July 20th, 2008

Basic C++ image library. Features:

- Templated image classes (integer, floating)

- Smart-pointered; copies are all shallow.

- Plugins for input/output (jpeg, png, movies)

- Basic filtering operations (signed distance function).

- Python wrappers.

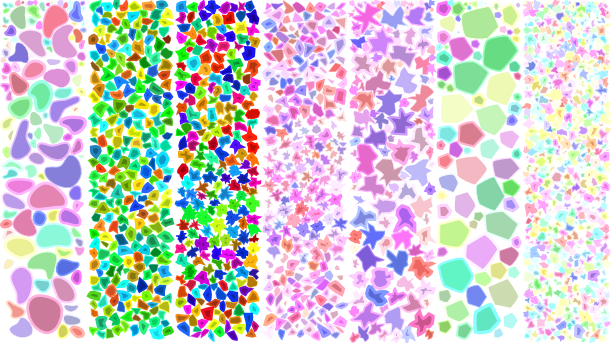

JShape

Capture System

Posted by in Projects on November 30th, -0001

The original capture system project. This started as a capture system written by Keith and me in MATLAB. About a year later (2003/2004) I wrote a C++ version with wxWidgets.

It turned out to be very useful for several other projects.

Features:

- Cross-platform

- Plugin style interface (very simple)

- IEEE 1394 (FireWire) capture or load from images

- Internal tools for computing texture coordinates


GT Racer

Posted by in Projects on November 30th, -0001

GT Racer demo, OpenGL.

I finally found the time to do some updates and get a Windows binary available. The zip file is large (mostly due to uncompressed sound and models). There are lots of things that still need fixing:

- Graphics

- Multiplayer

- A couple more little things to make single player complete

- A lot of code fixing

Oh well. At least it is up here in case anyone wants to test it out.

The controls are ‘w’ for push (currently you can push as much as you want), middle button for controlling the front ski, and flicks of the right button for backflips and spinning. There are some keys bound to the tricks (I think ‘e’, ‘f’, ‘z’ and ‘c’, or ‘q’ or something). ‘a’ and ‘d’ may be bound to dropping flags (my crappy level editor).

Oh yeah, the directory it unpacks to is a huge freakin’ mess, and the program crashes when you close the window. All in good time my friend…

M.Sc. Thesis

Posted by in Projects on November 30th, -0001

In my M.Sc. project I studied methods for recovering the shape and reflectance of objects from image sets in indoor environments.

Masters Thesis

My master's thesis work is focused on the capture of 3D models and reflectance properties from images. One of the main goals of this work was to produce a 3D geometry and texture map that was easy to use in typical graphics applications. We emphasize that obtaining a true geometry overcomes some of the limitations of image-based representations, in that the models can be rendered under novel lighting and novel viewpoints. Since a mesh representation is common in graphics applications, our models can easily be merged with other models and modified (i.e., animated) by artists.

A pdf of the document is available.

Below are some results and applications of this work.

Results

Animated GIF of the initial visual hull and the refined results of the saddog sequence.

Two of the input images (top), followed by the ground truth (2nd row), the visual hull reconstruction (3rd row), and the reconstructed results (bottom).

Two of the input images (top), followed by the initial model (2nd row), a shaded model of the refined results (3rd row), and a texture mapped model (bottom).

The first column is an input image, followed by a shaded rendering of the recovered model, a rendering of the diffuse texture lit under the same lighting, a rendering of the diffuse + specular texture, and a rendering with novel lighting and shadows.

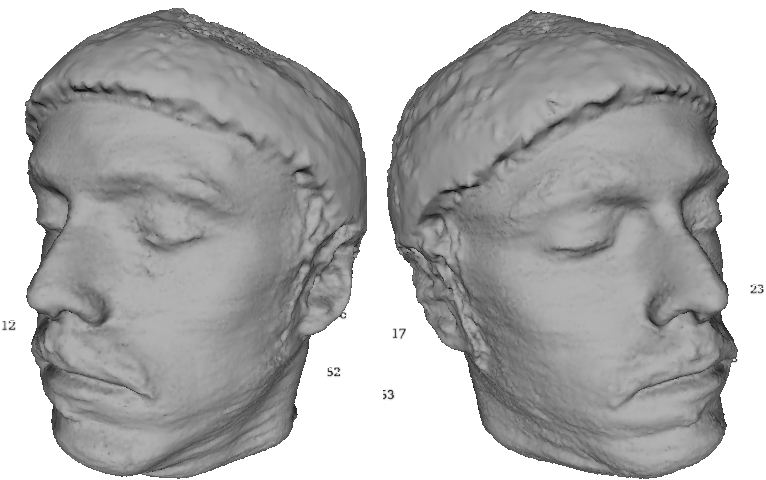

Some recent results (Aug 18th) on my head. The untextured version shows a higher res version than the textured version. Only the Lambertian component is shown in the texture.

Some results of a failed attempt are also available: shaded and textured. In this case there were many missing images from the side view, and one side was not reconstructed very well.

Videos of the optimization are also available:

Windows users will need to have the divx codec installed to play the files. The codec is available as a free download from www.divx.com.

A number of the objects, as well as a downloadable viewer, can be obtained on the Models page.

Applications

As one of the main applications is computer graphics/entertainment, the objects can easily be merged with other objects (hand-modeled or captured), and rendered under novel viewpoints and lighting. Below is an example of a rendering made in Blender. There are several novel lights, a synthetic sphere, and the elephant's legs and trunk have been animated.

Another application we have implemented is a Chess game. Each of the characters in the game was actually modeled using the method described in this thesis. The Windows executable requires cygwin1.dll, available at www.cygwin.com.

Gimp – Integrate Normals

Posted by in Projects on November 30th, -0001

This plugin is used to integrate normal maps into depth maps. As input the plugin takes an RGB description of a normal map, and outputs the corresponding depth map. There are two modes of operation.

More details and screenshots to come.

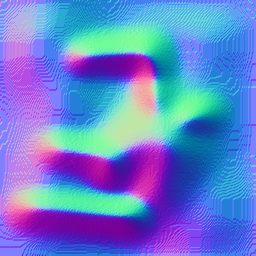

As an example, the program can be used to create normal maps from depth maps, or more importantly depth maps from normal maps.

To illustrate, consider the input depth map:

In some vision problems (e.g., shape from shading), you have a normal map and you want to recover the depth map. The plugin can be used to generate the normal map from the depth map (left). Running the plugin once more (with this typical input), we can recover the depth:

The interface has several modes for packing either the normals or the depth, but if you want to use them, you will need to look at the code. The plugin requires the fast Fourier transform library fftw3 to compile and run. It also uses a sparse linear least squares solver, lsqr, which is included in the source.
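To make the depth/normal relationship concrete, here is a small sketch of the round trip. It is not the plugin's method: instead of an FFT or least-squares solve, it integrates naively along the first row and then down each column, which is exact for clean data but accumulates error on noisy normals. The function names and the convention that a normal is proportional to (-dz/dx, -dz/dy, 1) are assumptions for illustration.

```python
import math


def depth_to_normals(depth):
    """Unit normals (nx, ny, nz) from forward differences of a depth grid."""
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            # Forward differences, falling back to backward at the border.
            x0, x1 = (x, x + 1) if x + 1 < w else (x - 1, x)
            y0, y1 = (y, y + 1) if y + 1 < h else (y - 1, y)
            p = depth[y][x1] - depth[y][x0]  # dz/dx
            q = depth[y1][x] - depth[y0][x]  # dz/dy
            norm = math.sqrt(p * p + q * q + 1.0)
            row.append((-p / norm, -q / norm, 1.0 / norm))
        normals.append(row)
    return normals


def normals_to_depth(normals):
    """Naive integration: walk along the first row, then down each column."""
    h, w = len(normals), len(normals[0])
    depth = [[0.0] * w for _ in range(h)]
    for x in range(1, w):
        nx, _, nz = normals[0][x - 1]
        depth[0][x] = depth[0][x - 1] - nx / nz  # dz/dx = -nx/nz
    for y in range(1, h):
        for x in range(w):
            _, ny, nz = normals[y - 1][x]
            depth[y][x] = depth[y - 1][x] - ny / nz  # dz/dy = -ny/nz
    return depth
```

Depth is only recovered up to an additive constant, since the normals carry gradient information only; here the top-left pixel is pinned to zero. A global solver spreads the error over the whole image instead of letting it build up along one integration path.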

tetris08

Posted by in Projects on November 30th, -0001

Back in 2004, Keith Yerex and I played a game of who can write Tetris the fastest on a flight from Toronto to Edmonton.

I don't remember who won, and I don't have the source anymore, but it was a good way to kill time.

On a recent trip to Atlanta for a conference, I played a solitaire version of write tetris fast; this is the result. I spent some more time during the evenings on that trip adding some crude AI that takes the history into account.

Executables and source to come.

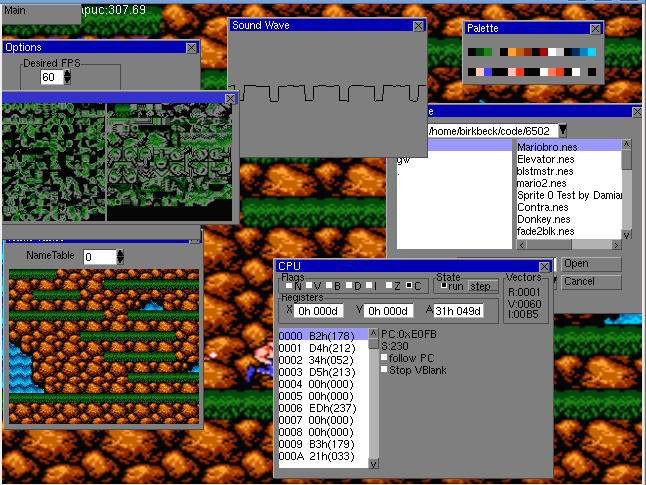

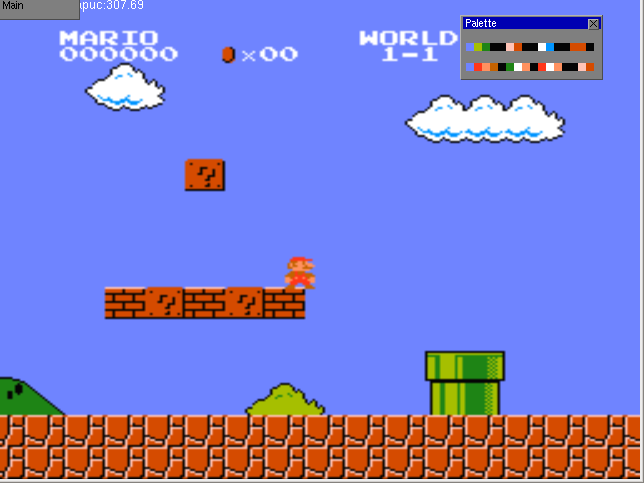

Nestacy (NES Emulator)

Posted by in Projects on November 30th, -0001

History

Shortly after graduating (or perhaps at the end of my third year of undergrad) I started work on a NES emulator. It was a good testbed to test out all of the things that I had learnt.

The emulator would of course not have been possible without the immense amount of detail about the internals of the NES available online (I believe most of it is on this site someplace: http://nesdev.parodius.com/).

I started by creating an emulator for the 6502. Some of the results of the opcodes were a bit unclear, and I am sure that my emulator had a few bugs. I think there were some test programs to try out the emulator. The next component was the PPU, and it didn’t take long before I was able to get some crude graphics up. Of course, during this time I had to create a set of utilities to draw debug information for both the CPU and PPU (this became my first windowing toolkit: GWindows). There were still some bugs in the 6502 at that time, but there was a NES rom that tested all of the opcodes (and some of their peculiarities on certain page boundaries) and displayed the results visually. This allowed me to finalize the 6502 core. After that came adding some memory mappers for loading various ROMs. It wasn’t until later on that I added sound support.

The emulator is still incomplete and doesn’t load all of the available ROMs. There was one bug, however, that I was never able to get rid of. It had to do with the sprite-1 detection and timing. The bug is visible in Super Mario Bros., where the sprite-1 detect feature is used to switch name tables or something (the coin at the top). In the end it causes the scrolling of the top HUD to be offset incorrectly horizontally (like an erroneous VSync on a TV). I am happy to leave it as one of those bugs that I will never find.

The code is a bit of a mess so I didn’t post it. If someone is actually interested, I would be happy to provide it.

GWindows

The windowing toolkit was also a first for me at the time. There was support for numerous widgets, a file open dialog, and easily subclassable windows. I did end up using this windowing toolkit for other projects (at first the YUV capture system renderer) but never finalized the API.

Screenshots