Posts Tagged research

CameraCal

This is a camera calibration tool inspired by the Camera Calibration Toolbox for MATLAB. Give it a few checkerboard images, select some corners, and you get the intrinsic calibration parameters for your camera.

Features:

- Output to xml/clb file

- Radial distortion

Windows binaries (and source code) to come.
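For reference, the intrinsic model that a tool like this estimates is usually a pinhole projection with focal lengths, a principal point, and polynomial radial distortion. The sketch below assumes a two-coefficient radial model (`k1`, `k2`) similar to the MATLAB toolbox's. CameraCal's exact parameterization and its xml/clb output are not described here, and the function name is hypothetical.

```python
def project_point(point, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a camera-frame 3D point to pixel coordinates.

    Pinhole model with two-coefficient radial distortion (an assumed
    parameterization; skew and tangential terms are omitted).
    """
    X, Y, Z = point
    x, y = X / Z, Y / Z                    # normalized image coordinates
    r2 = x * x + y * y                     # squared radius from the optical axis
    radial = 1.0 + k1 * r2 + k2 * r2 * r2  # radial distortion factor
    return (fx * x * radial + cx, fy * y * radial + cy)
```

Calibration then amounts to choosing `fx, fy, cx, cy, k1, k2` (plus each board's pose) so that the projected checkerboard corners land as close as possible, in the least-squares sense, to the corners you selected.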

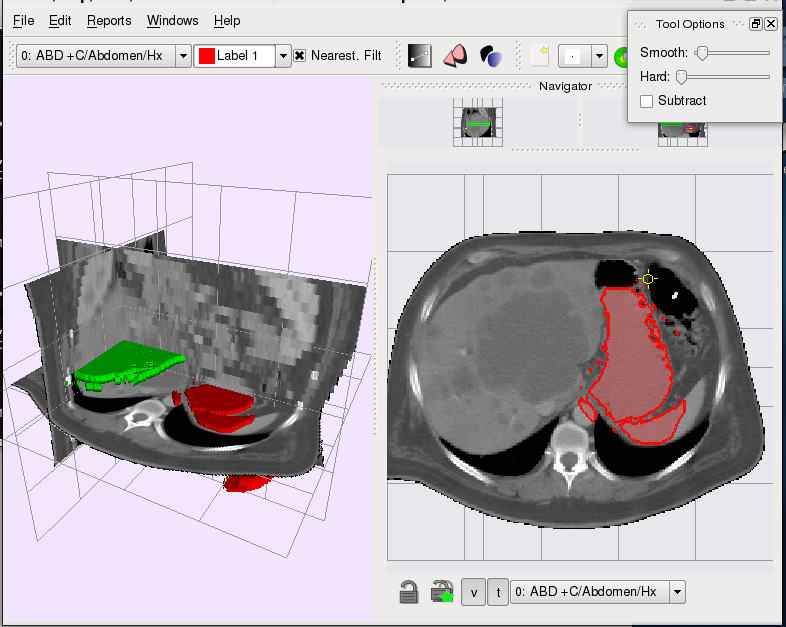

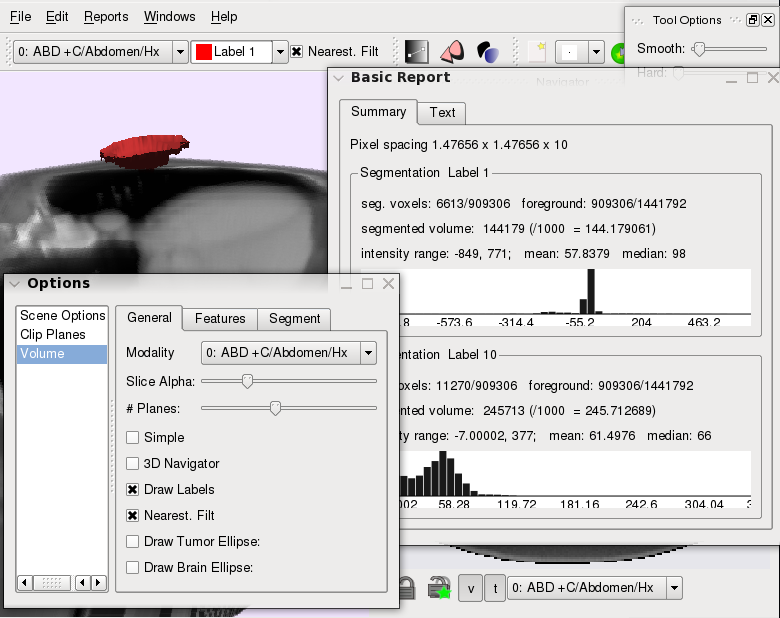

Segmentation Tool

Posted in Projects on April 22nd, 2009

Overview

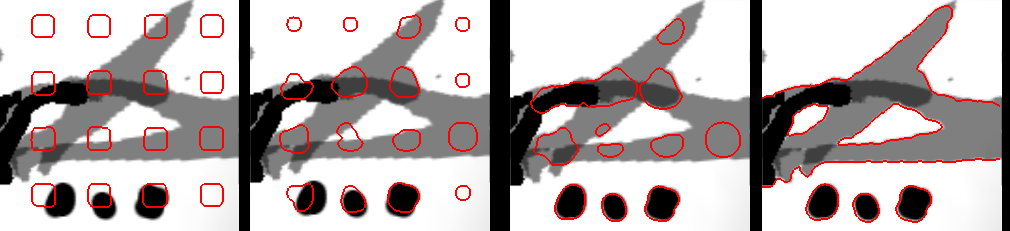

The segmentation tool started as a simple 3D segmentation viewer with crude interaction, built to inspect the results of an automatic level-set variational segmentation. Since then, several semi-automatic tools have been incorporated into the viewer. These tools take the region statistics from a user selection and try to intelligently delineate the boundary of the selected region. This lets a user roughly specify the region of interest (be it an anatomical structure or a tumor) while the software takes care of extracting the boundary.

The tool is currently in beta, but our lab has already used it to perform segmentations for several research projects. It may be open-sourced in the future.

Features

- Multiple segmentation layers

- Semi-automatic interactive tools (graph-cut based)

- Different region statistics modes (histograms, independent histograms, mean)

- Slice locking fixes the segmentation on a slice and ensures that 3D automatic tools do not interfere with user segmentations

- Plugin-based interaction tools

- 3D view and multiple 2D views (with different image modalities)

- DICOM loading (using the GDCM library)

- Cross-platform (Linux/Windows, probably Mac)
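The tool's actual energy, graph construction, and max-flow solver are not described on this page. As a rough illustration of how the graph-cut tools could use region statistics, here is a toy 1D version. It takes the "mean" statistics mode from user-seeded pixels, turns them into per-pixel data costs, and finds a binary labelling by s-t min-cut using Edmonds-Karp max-flow. The function names, the squared-difference cost, and the `smooth` weight are all assumptions. A histogram mode would replace the squared difference with a negative log-probability taken from the seeds' histogram.

```python
from collections import deque


def mean_costs(signal, fg_seeds, bg_seeds):
    """'Mean' region-statistics mode: squared distance to each seed region's mean."""
    mu_fg = sum(signal[i] for i in fg_seeds) / len(fg_seeds)
    mu_bg = sum(signal[i] for i in bg_seeds) / len(bg_seeds)
    return [(x - mu_fg) ** 2 for x in signal], [(x - mu_bg) ** 2 for x in signal]


def graph_cut_1d(cost_fg, cost_bg, smooth):
    """Binary labels (1 = foreground) for a 1D signal via s-t min-cut."""
    n = len(cost_fg)
    source, sink = n, n + 1
    cap = {u: {} for u in range(n + 2)}  # residual capacities

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0.0) + c
        cap[v].setdefault(u, 0.0)  # reverse residual edge

    for i in range(n):
        add_edge(source, i, cost_bg[i])  # cut when i is labelled background
        add_edge(i, sink, cost_fg[i])    # cut when i is labelled foreground
        if i + 1 < n:                    # smoothness between neighbours
            add_edge(i, i + 1, smooth)
            add_edge(i + 1, i, smooth)

    while True:  # Edmonds-Karp: augment along shortest residual paths
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break
        flow, v = float("inf"), sink
        while parent[v] is not None:  # bottleneck capacity on the path
            flow = min(flow, cap[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:  # push the flow along the path
            u = parent[v]
            cap[u][v] -= flow
            cap[v][u] += flow
            v = u
    # Pixels still reachable from the source after max-flow are foreground.
    return [1 if i in parent else 0 for i in range(n)]


signal = [0.1, 0.2, 0.9, 0.8, 0.15]
fg, bg = mean_costs(signal, fg_seeds=[2], bg_seeds=[0])
print(graph_cut_1d(fg, bg, smooth=0.01))
```

A larger `smooth` favours fewer label changes. With a large enough value, the whole signal takes a single label.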

Screenshots

Contributors

Neil Birkbeck, Howard Chung, Dana Cobzas.

Proving Ground

The tool also serves as a proving ground for other semi-automatic segmentation methods. To improve the segmentation when a prior shape is known (for example, a mean shape from previous segmentations), an extension was developed that optimizes over shape deformations (rigid plus non-rigid transformations) while keeping the resulting segmentation close to the mean shape. Below are some videos related to the implementation of this idea (a short presentation, without references, is also available: levprior.pdf).

The video links illustrate a selection (which mimics the mean shape), followed by the optimization of the segmentation (hardness_mid.mp4/hardness_mid.flv, varying_hardness.mp4/varying_hardness.flv). The hardness parameter determines how close the segmentation stays to the mean shape. The interp video (interp.mp4/interp.flv) shows how a single mean shape can carry over to different slices. Ideally this would be done in 3D with a fixed 3D mean shape. Finally, this model makes it easy to introduce additional user constraints in the form of point constraints (point_cons.mp4/point_cons.flv).
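The formulation used in the tool is not given on this page. The toy 1D example below only illustrates the role of a hardness weight. For each candidate translation of the mean shape (standing in for the rigid part of the deformation), it minimizes a relaxed per-sample energy `(m - d)^2 + hardness * (m - p)^2` and keeps the best shift. The quadratic energy, the 1D translations, and all names are assumptions for illustration, not the author's method.

```python
def shift(mask, t):
    """Translate a 1D mask by t samples, padding with background (0)."""
    n = len(mask)
    return [mask[i - t] if 0 <= i - t < n else 0.0 for i in range(n)]


def segment_with_prior(data, prior, hardness, shifts=range(-3, 4)):
    """Toy shape-prior segmentation over 1D translations of a mean shape.

    Per sample, minimizes (m - d)^2 + hardness * (m - p)^2 over a relaxed
    label m; the closed-form minimizer is (d + hardness*p) / (1 + hardness).
    """
    best = None
    for t in shifts:
        p = shift(prior, t)
        m = [(d + hardness * q) / (1.0 + hardness) for d, q in zip(data, p)]
        energy = sum((mi - d) ** 2 + hardness * (mi - q) ** 2
                     for mi, d, q in zip(m, data, p))
        if best is None or energy < best[0]:
            best = (energy, t, m)
    _, t, m = best
    return t, [1 if v >= 0.5 else 0 for v in m]  # threshold the relaxed labels
```

With a low hardness, the result follows the (noisy) data. With a hardness above 1, samples where the data and the aligned mean shape disagree flip toward the mean shape.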

Passive Walker

Posted in Projects on March 28th, 2009

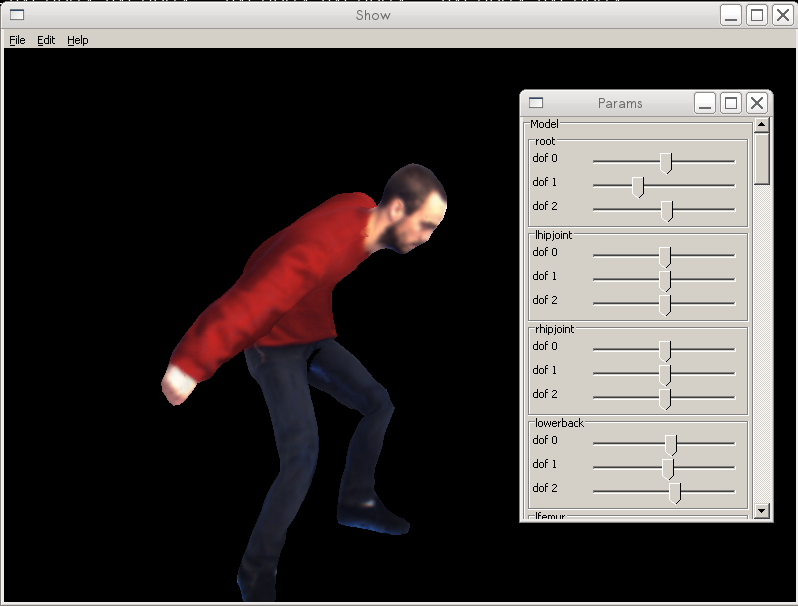

In the past week (late March 2009), I kept coming across the same paper (by Brubaker et al.) that used an anthropomorphic passive walker as a prior for people tracking. I had read the paper before, but this time I couldn’t contain the urge to implement some of the ideas. This is the result of that impulse.

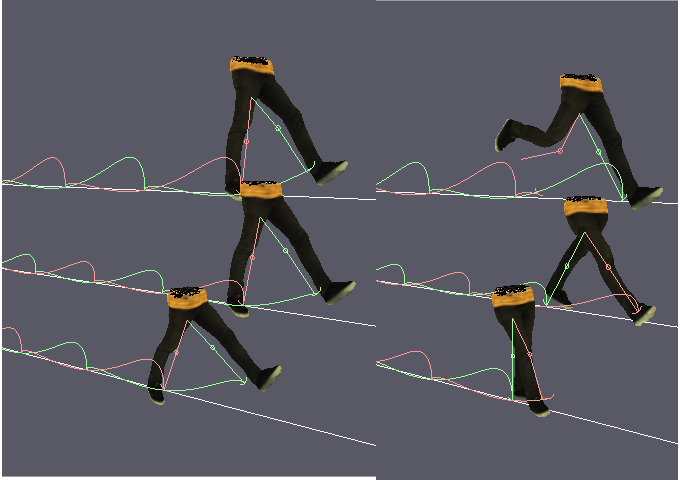

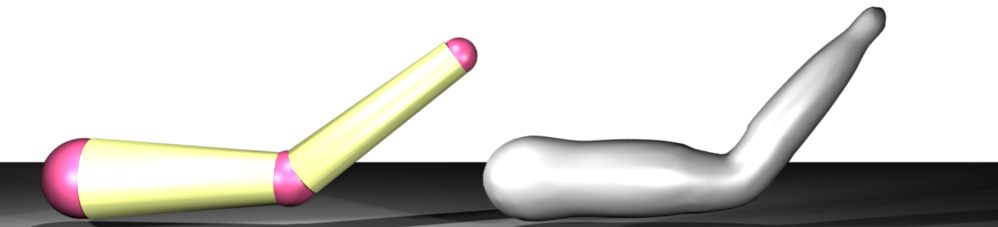

The passive walker has a spring between the hips and a parameter for the impulse received on collision. Varying these two parameters generates different walking styles, including cyclic gaits at different speeds and stride lengths. The walker has only two straight legs, but Brubaker suggests a method to map this to a kinematic structure. The screenshot below shows several walkers attached to a kinematic structure and a skinned mesh (anim).
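The walker in this post has a hip spring and swinging legs. The sketch below strips it down to a point mass at the hip with massless legs, a simplification I am assuming here and not the walker used above, to show how a collision impulse and the step angle together fix a cyclic gait. Each step, a push-off impulse is applied along the old stance leg. Heel strike then removes the velocity component along the new stance leg. The function names are hypothetical.

```python
import math


def step_transition(v, push_off, alpha):
    """Speed after push-off then heel strike, point-mass walker.

    v: speed (perpendicular to the old stance leg) just before push-off;
    push_off: impulse per unit mass along the old stance leg;
    alpha: half the angle between the legs at collision.
    Heel strike removes the velocity component along the new stance leg.
    """
    return v * math.cos(2.0 * alpha) + push_off * math.sin(2.0 * alpha)


def steady_push_off(v, alpha):
    """Push-off impulse that makes the gait cyclic at speed v."""
    return v * math.tan(alpha)


def simulate(v0, push_off, alpha, steps=100):
    """Iterate the step-to-step map.

    On level ground a symmetric stance phase (inverted pendulum from -alpha
    to +alpha) ends at the speed it started with, so only the transition
    changes the speed from one step to the next.
    """
    speeds = [v0]
    for _ in range(steps):
        speeds.append(step_transition(speeds[-1], push_off, alpha))
    return speeds
```

Because `cos(2 * alpha) < 1`, the map is a contraction. A fixed push-off therefore converges to a cyclic gait with speed `push_off / tan(alpha)`, so choosing the step angle and the impulse selects stride length and speed.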

This is better illustrated in the movie, in which I control the speed/step size of the gait with mouse movements:

The movies (passive.mp4, passive.flv)

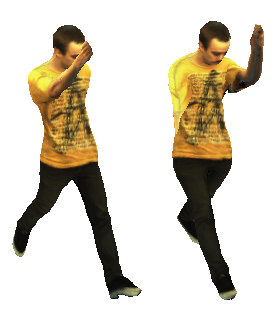

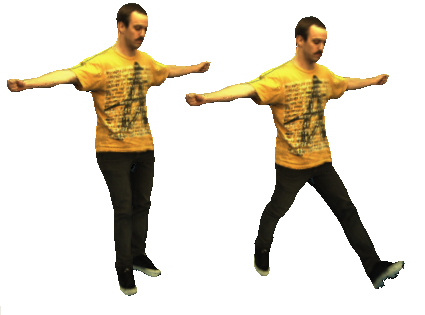

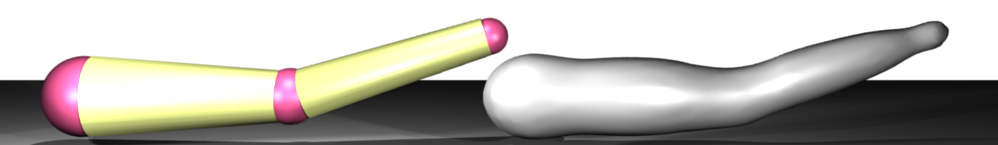

I spent about an hour throwing together something that tries to pose the rest of a mesh using the walk cycle. The idea was to use a training set of poses (from motion-capture data) and pick poses for the upper body from the training sequence based on the orientation of the lower joints. I’m sure it could be done better. Below are some examples: the left-hand side shows some poor results (due to overconstrained joint angles), and the right side shows an unposed upper body. Some of these sequences (anims) are available for use with the viewer on the wxshow page.
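Here is a minimal sketch of that lookup. It assumes each training frame stores a vector of lower-body joint angles together with the matching upper-body pose, and it uses a wrapped Euclidean distance between angle vectors. The post does not say which distance was used, so that choice and the function names are assumptions. No temporal smoothing is applied between frames.

```python
import math


def angle_diff(a, b):
    """Signed difference a - b wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2.0 * math.pi) - math.pi


def pose_distance(a, b):
    """Euclidean distance between two vectors of joint angles (radians)."""
    return math.sqrt(sum(angle_diff(x, y) ** 2 for x, y in zip(a, b)))


def pick_upper_body(lower_angles, training):
    """Nearest-neighbour lookup.

    training: list of (lower_body_angles, upper_body_pose) pairs, e.g. frames
    from a motion-capture sequence. Returns the upper-body pose of the frame
    whose lower-body angles are closest to lower_angles.
    """
    _, upper = min(training, key=lambda frame: pose_distance(frame[0], lower_angles))
    return upper
```

Wrapping the angle differences keeps poses near +pi and -pi close to each other, which a plain subtraction would not.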

A document containing some of my implementation details: passive.pdf

ispace viewer (inm)

Posted in Projects on March 9th, 2009

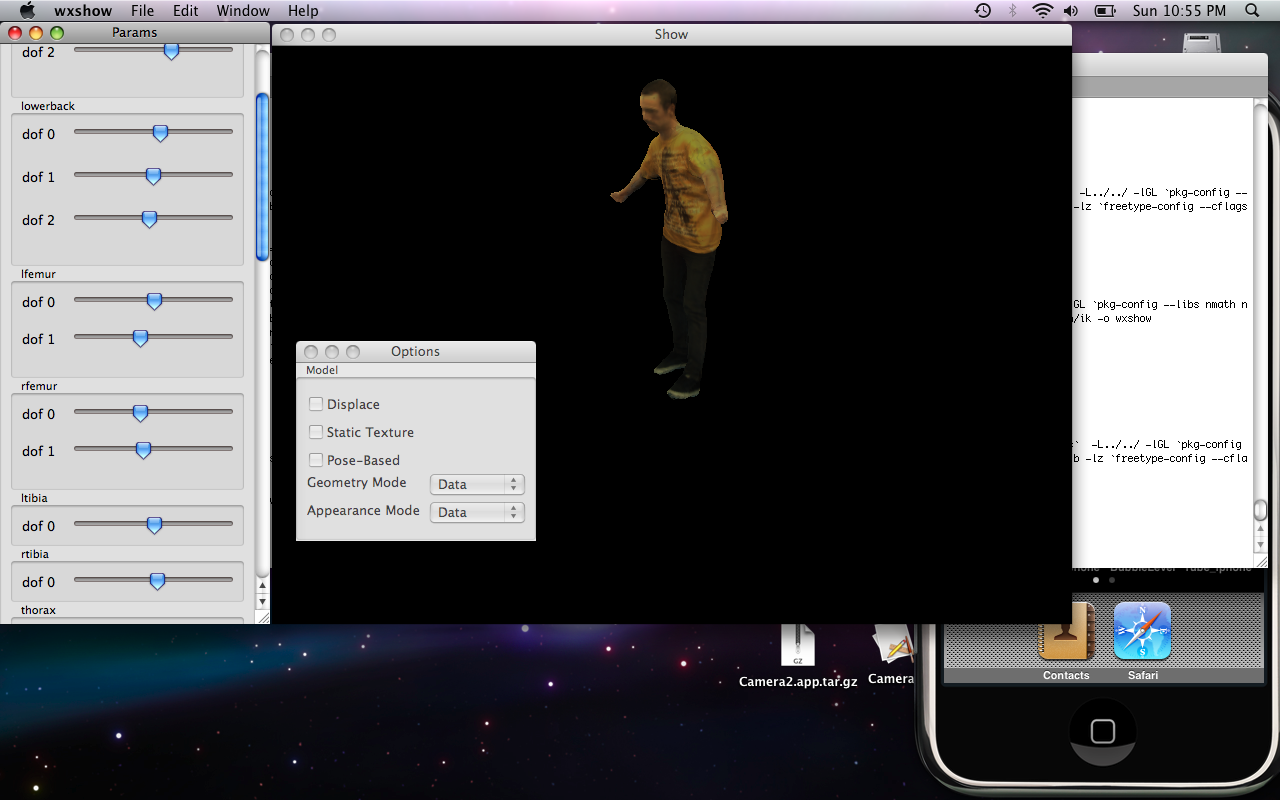

This is a cross-platform viewer for inspecting and playing with the parameters of my shape/appearance interpolation files (inm).

I originally created this to allow viewing of the models that I create in my research. It is in a preliminary state, but can currently be used to render captured animations on a model, while demonstrating geometry deformations and view-dependent appearance.

Files (let me know if there are any problems):

Models:

Status:

- This is an Alpha release (Mar 9)

Problems:

- Crash on exit (Mac)

- MIME types (Mac/Windows)

- Status-bar for loading

- Temporary file cleanup

PhD Progress

Posted in Projects on August 7th, 2008

Vision-based Modeling of dynamic Appearance and Deformation of Human Actors


Abstract:

The goal of the proposed research is to capture a concise representation of human deformation and appearance in a low-cost environment. This representation should integrate seamlessly into entertainment and virtual reality applications, which often require relighting, high levels of realism, and rendering from novel viewpoints. Existing geometric modeling techniques focus solely on geometric deformation and ignore the appearance component. I propose a combined model of geometric and appearance deformation, which leads to a more compact representation while still achieving high levels of realism. Furthermore, many existing methods require input data from artists or expensive laser scanners. The proposed vision-based method is less expensive and requires only limited manual intervention.

In a controlled environment, several cameras will be used to acquire a dense static geometry and a basic appearance model of a street-clothed human (e.g., a texture-mapped model). A skeletal model is manually aligned with the existing geometry, and the actor performs a sequence of predefined motions. Multi-image vision-based tracking techniques are used to extract the kinematic motion parameters that account for the gross motion of the object. Multi-view stereo, silhouette cues, and temporal coherence are used to extract the time-varying residual geometric deformation. These time-dependent geometric quantities, together with the time- and view-dependent photometric quantities, are used to create the final compact model of appearance and geometric variation. The deformation model is built on top of linear blend skinning, following several graphics techniques for modeling articulated deformation and leveraging existing tools for image-based rendering. Appearance and geometric variation are modeled together as a function of abstract interpolating parameters that include, e.g., relative joint angles and view angles. Examples of deformation include muscle bulging and some forms of cloth budging. We model this compactly using dimensionality reduction and sparse data interpolation. Several low-dimensional subspaces and corresponding interpolation spaces are used, effectively clustering portions of the surface that are affected by the same abstract interpolators. In contrast to many vision-based human modeling techniques, our complete model can be re-rendered under novel viewpoints, novel animations, and even novel illumination when the illumination during capture is calibrated.

The specified model also has potential applications in appearance and deformation transfer between different subjects. Furthermore, the combined geometric and appearance model should transfer almost directly to similar domains, such as modeling hand deformations, and to related domains, such as facial deformations. An improved model of geometry and appearance could also be used to improve markerless vision-based motion capture. This work also aims to better identify the range of clothing and deformations that can be both captured and satisfactorily modeled as a function of relative joint angles, neighboring rigid-body velocities, and other related external factors, such as the direction of gravity and wind.
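The abstract names two building blocks: linear blend skinning, and sparse data interpolation over abstract parameters such as joint angles. Below is a toy 2D sketch of how the two could fit together. Gaussian radial basis functions are my assumption for the interpolant (the abstract does not name one). The per-cluster dimensionality reduction is omitted, and all names and numbers are hypothetical.

```python
import math


def rot2(theta):
    """2D rotation matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))


def apply_bone(bone, p):
    """Apply a rigid bone transform (R, t) to a 2D point."""
    R, t = bone
    return (R[0][0] * p[0] + R[0][1] * p[1] + t[0],
            R[1][0] * p[0] + R[1][1] * p[1] + t[1])


def skin(rest, weights, bones):
    """Linear blend skinning: blend each vertex's per-bone rigid transforms."""
    posed = []
    for p, w in zip(rest, weights):
        moved = [apply_bone(b, p) for b in bones]
        posed.append((sum(wi * m[0] for wi, m in zip(w, moved)),
                      sum(wi * m[1] for wi, m in zip(w, moved))))
    return posed


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


class RBFInterpolator:
    """Gaussian-RBF interpolation of a scalar residual over one joint angle."""

    def __init__(self, angles, values, width=0.5):
        self.angles, self.width = list(angles), width
        K = [[self._kernel(a, b) for b in self.angles] for a in self.angles]
        self.coef = solve(K, list(values))  # weights that reproduce the samples

    def _kernel(self, a, b):
        return math.exp(-((a - b) / self.width) ** 2)

    def __call__(self, angle):
        return sum(c * self._kernel(angle, a) for c, a in zip(self.coef, self.angles))


# Hypothetical elbow: one vertex weighted half to each bone, plus a "bulge"
# offset learned at three captured elbow angles (added in world y for brevity).
elbow = 1.2
bones = [(rot2(0.0), (0.0, 0.0)), (rot2(elbow), (0.0, 0.0))]
base = skin([(1.0, 0.0)], [[0.5, 0.5]], bones)[0]
bulge = RBFInterpolator([0.0, 0.8, 1.6], [0.0, 0.05, 0.12])
print(base[0], base[1] + bulge(elbow))
```

Skinning supplies the gross articulated motion. The interpolated residual then adds the pose-dependent detail (such as muscle bulging) that skinning alone cannot produce. In a real model, the residual would be a vector per vertex expressed in a local frame, or a set of subspace coefficients, rather than a single scalar.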

Documents

The third version of the candidacy report is now available: candidacy.pdf (updated Wed Apr 18 12:01). The document is a work in progress and should not be redistributed.

Update: I passed my candidacy exam. More details about the exam are on a separate page.

Media

Multi-view tracking preliminary results.

Testing of collision bounding representation

ben_collision.avi

levset

Posted in Projects on July 20th, 2008

This library contains classes that implement 2D/3D level sets (full and narrow-band).

Features:

- explicit and implicit schemes for basic evolution equations.

- marching squares/cubes to obtain a surface.

- fast marching method (FMM) for re-initialization

- loading/saving data

There are also SIP Python bindings, which make it easy to prototype a solution. For example, to perform Chan-Vese segmentation, you would define a function that specifies the evolution:

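```python
import math

def dirac(phi, eps):
    # regularized Dirac delta, eps / (pi * (eps^2 + phi^2)) (assumed helper)
    return eps / (math.pi * (eps * eps + phi * phi))

# evolution function, returns how much to move and maximum time step.
# ak (curvature weight) and xsp, ysp (grid spacing) are module-level settings.
def fun(levset, image, stats, x, y):
    d, k = dirac(levset[x, y], 2.0), levset.getCurvature(x, y)
    pix = (image.pixel(x, y) & 0xFF) / 255.0  # low byte of the pixel, scaled to [0, 1]
    pin, pout = (pix - stats[0]) ** 2, (pix - stats[1]) ** 2
    upd = d * (ak * k + (pin - pout))
    return (upd, 0.98 / (math.fabs(d * (2.0 * ak / (xsp * xsp) + 2.0 * ak / (ysp * ysp))) + 0.01))
```

Then bind the extra parameters with a lambda function and interleave the level-set motion with the re-initialization calls:

```python
# stats is looked up when func is called, so each pass sees the latest values
func = lambda levset, x, y: fun(levset, self.image, stats, x, y)

while not done:
    stats = computeStats()  # region statistics used as stats[0] (pin) and stats[1] (pout)
    for _ in range(3):
        levset.moveWithCurvature(func)
    levset.ensureSignedDistanceFMM()
    # ...
```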

M.Sc. Thesis

Posted in Projects

In my M.Sc. project I studied methods for recovering the shape and reflectance of objects from image sets in indoor environments.

Master's Thesis

My master's thesis focuses on capturing 3D models and reflectance properties from images. One of the main goals of this work was to produce a 3D geometry and texture map that are easy to use in typical graphics applications. We emphasize that obtaining a true geometry overcomes some of the limitations of image-based representations, in that the models can be rendered under novel lighting and from novel viewpoints. Since a mesh representation is common in graphics applications, our models can easily be merged with other models and modified (i.e., animated) by artists.
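The initial models in the results below come from a visual hull. Below is a rough sketch of that step only (the refinement and reflectance estimation are not shown), using voxel carving: a voxel is kept if its centre projects inside every silhouette. Representing cameras as 3x4 matrices and silhouettes as row-major 0/1 grids is my assumption. Testing only the voxel centre is a simplification.

```python
def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P; returns (u, v) pixels."""
    h = [sum(P[r][c] * x for c, x in enumerate((X[0], X[1], X[2], 1.0))) for r in range(3)]
    return h[0] / h[2], h[1] / h[2]


def carve(voxels, cameras, silhouettes):
    """Voxel-carving visual hull: keep voxels that project inside every silhouette."""
    kept = []
    for X in voxels:
        for P, sil in zip(cameras, silhouettes):
            u, v = project(P, X)
            col, row = int(round(u)), int(round(v))
            if not (0 <= row < len(sil) and 0 <= col < len(sil[0]) and sil[row][col]):
                break  # outside this silhouette: carve the voxel away
        else:
            kept.append(X)
    return kept


# Two hypothetical affine views of a 3x3x3 grid: one looking down z, one down x.
down_z = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1]]  # (x, y, z) -> (u=x, v=y)
down_x = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]  # (x, y, z) -> (u=y, v=z)
sil_z = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]            # only x == 1 is occupied
sil_x = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]            # only z == 1 is occupied
grid = [(x, y, z) for x in range(3) for y in range(3) for z in range(3)]
print(carve(grid, [down_z, down_x], [sil_z, sil_x]))  # [(1, 0, 1), (1, 1, 1), (1, 2, 1)]
```

The hull is always a superset of the true shape (concavities invisible in every silhouette survive), which is why a refinement stage follows it.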

A PDF of the document is available.

Below are some results and applications of this work.

Results

Animated GIF of the initial visual hull and the refined results of the saddog sequence.

Two of the input images (top), followed by the ground truth (2nd row), the visual hull reconstruction (3rd row), and the reconstructed results (bottom).

Two of the input images (top), followed by the initial model (2nd row), a shaded model of the refined results (3rd row), and a texture mapped model (bottom).

The first column is an input image, followed by a shaded rendering of the recovered model, a rendering of the diffuse texture lit under the same lighting, a rendering of the diffuse + specular texture, and a rendering with novel lighting and shadows.
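Once diffuse and specular parameters are stored per texel, relighting means re-evaluating a reflectance model with new lights. The sketch below assumes a Lambertian diffuse term plus a Blinn-Phong specular lobe. That is my assumption, not necessarily the reflectance model used in the thesis, and shadows (which need a visibility test) are left out.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v) if length > 0 else tuple(v)


def shade(normal, light_dir, view_dir, albedo, ks, shininess, intensity=1.0):
    """Diffuse (Lambertian) plus Blinn-Phong specular radiance for one light.

    albedo comes from the diffuse texture, ks and shininess from the specular
    one; light_dir and view_dir point away from the surface.
    """
    n, l, v = unit(normal), unit(light_dir), unit(view_dir)
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return 0.0  # the surface faces away from this light
    h = unit(tuple(a + b for a, b in zip(l, v)))  # half vector between light and view
    return intensity * (albedo * n_dot_l + ks * max(0.0, dot(n, h)) ** shininess)
```

Rendering under novel lighting then sums `shade(...)` over the new lights while keeping the recovered `albedo`, `ks`, and `shininess` fixed. Dropping the specular term gives the diffuse-only rendering.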

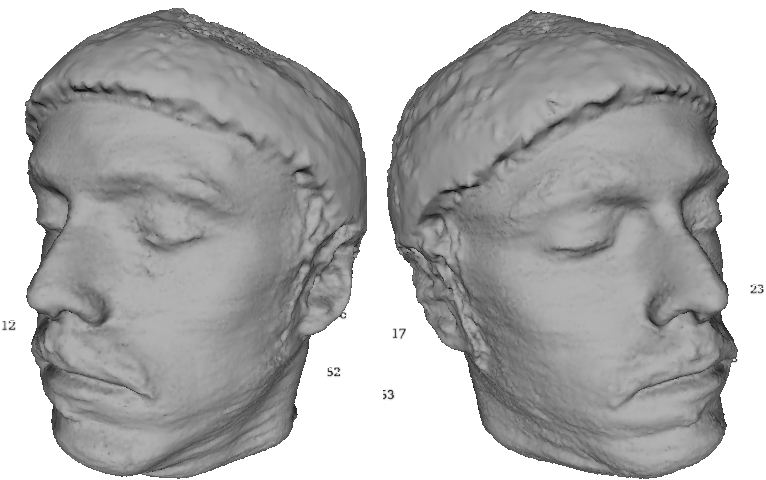

Some recent results (Aug 18th) on my head. The untextured version is at a higher resolution than the textured version. Only the Lambertian component is shown in the texture.

Some results of a failed attempt are also available: shaded and textured. In this case there were many missing images from the side view, and one side was not reconstructed very well.

Videos of the optimization are also available:

Windows users will need to have the DivX codec installed to play the files. The codec is available as a free download from www.divx.com.

A number of the objects, as well as a downloadable viewer, can be obtained on the Models page.

Applications

Since one of the main applications is computer graphics/entertainment, the objects can easily be merged with other objects (hand-modeled or captured) and rendered under novel viewpoints and lighting. Below is an example of a rendering made in Blender. It contains several novel lights and a synthetic sphere, and the elephant's legs and trunk have been animated.

Another application we have implemented is a chess game. Each of the characters in the game was modeled using the method described in this thesis. The Windows executable requires cygwin1.dll, available at www.cygwin.com.

Capture System

Posted in Projects

The original capture system project. This started as a capture system that Keith and I wrote in MATLAB. About a year later (2003/2004), I wrote a C++ version with wxWidgets.

It turned out to be very useful for several other projects.

Features:

- Cross-platform

- Plugin-style interface (very simple)

- IEEE 1394 (FireWire) capture or loading from images

- Internal tools for computing texture coordinates
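The texture-coordinate tools are not described further. One simple way to compute such coordinates, assuming a calibrated 3x4 camera matrix, is to project each vertex into a captured image and normalize by the image size. A multi-camera tool would also need to pick a view per face. The function name and the flip of v are assumptions.

```python
def texture_coords(vertices, P, width, height):
    """Per-vertex (u, v) in [0, 1] by projecting into one camera image.

    P is a 3x4 camera matrix; v is flipped because image rows grow downward
    while (OpenGL-style) texture v grows upward.
    """
    uvs = []
    for X in vertices:
        h = [sum(P[r][c] * x for c, x in enumerate((X[0], X[1], X[2], 1.0))) for r in range(3)]
        px, py = h[0] / h[2], h[1] / h[2]  # pixel coordinates
        uvs.append((px / width, 1.0 - py / height))
    return uvs
```

For example, with focal length 100 and principal point (50, 50) in a 100x100 image, a point on the optical axis maps to the texture centre (0.5, 0.5).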

Other links: