Around mid-September I wanted to create a synthetic dataset of wrinkle motion from real data. The synthetic dataset had some constraints: the motion should be smooth, and the cloth should not be too loose and wavy. I figured that I would just capture a sequence of real deforming clothing and then map a mesh onto the motion in the data. I realized that I was going to have to write some code to do this (and I also wasn’t afraid to specify plenty of manual correspondences). The input sequence looked roughly like the one below (captured from 4 cameras).

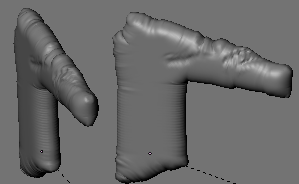

I figured I would use a combination of user constraints (and by user I mean me) and possibly photometric information (e.g., silhouettes and image intensities) to align a template model to the images. But first, I needed a template model. For this, I captured a front and back view of the shirt I was wearing, and created a flat polygonal model by clicking on the boundaries of the shirt in Blender. This makes it straightforward to get a distortion-free uv parameterization. The next step was to create a bulged model. It turns out that some of the garment capture literature already does something similar. The technique is based on inflating the model in the normal direction while ensuring that the faces do not stretch (or compress) too much. The details of avoiding stretch are described in the work on Plushie, an interactive design tool for plush toys.

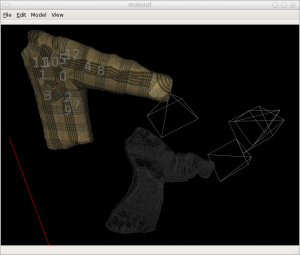

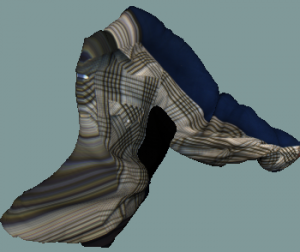
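The inflation step can be sketched roughly as follows. This is a simplified, hypothetical loop in the spirit of the idea, not Plushie's actual formulation: each iteration pushes every vertex a fixed distance along its area-weighted normal, then runs a few Jacobi-style passes that shorten any edge that has become longer than it was in the flat template. Compression is left unconstrained here for simplicity, and `step`, `iters` and `relax_iters` are made-up parameters. The toy mesh is a two-sided unit square standing in for the stitched front and back panels.

```python
import numpy as np

def vertex_normals(V, F):
    """Area-weighted vertex normals (zero where opposite faces cancel out)."""
    fn = np.cross(V[F[:, 1]] - V[F[:, 0]], V[F[:, 2]] - V[F[:, 0]])
    vn = np.zeros_like(V)
    for k in range(3):
        np.add.at(vn, F[:, k], fn)
    length = np.linalg.norm(vn, axis=1, keepdims=True)
    return vn / np.maximum(length, 1e-12)

def unique_edges(F):
    E = np.concatenate([F[:, [0, 1]], F[:, [1, 2]], F[:, [2, 0]]])
    return np.unique(np.sort(E, axis=1), axis=0)

def inflate(V, F, step=0.05, iters=50, relax_iters=10):
    """Hypothetical sketch: push along normals, then undo edge stretching."""
    V = np.array(V, dtype=float)
    E = unique_edges(F)
    rest = np.linalg.norm(V[E[:, 1]] - V[E[:, 0]], axis=1)  # flat-template lengths
    degree = np.bincount(E.ravel(), minlength=len(V))[:, None]
    for _ in range(iters):
        V += step * vertex_normals(V, F)  # "pressure" along the normal
        for _ in range(relax_iters):
            d = V[E[:, 1]] - V[E[:, 0]]
            length = np.linalg.norm(d, axis=1)
            excess = np.maximum(length - rest, 0.0)  # only stretched edges
            corr = (0.5 * excess / np.maximum(length, 1e-12))[:, None] * d
            delta = np.zeros_like(V)
            np.add.at(delta, E[:, 0], corr)   # move endpoints toward each other
            np.add.at(delta, E[:, 1], -corr)
            V += delta / np.maximum(degree, 1)  # average over incident edges
    return V

# Toy "garment": front and back panels of a unit square sharing the boundary.
V_flat = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0],
                   [0.5, 0.5, 0], [0.5, 0.5, 0]], dtype=float)
F = np.array([[0, 1, 4], [1, 2, 4], [2, 3, 4], [3, 0, 4],   # front, normal +z
              [1, 0, 5], [2, 1, 5], [3, 2, 5], [0, 3, 5]])  # back, normal -z
V_puffy = inflate(V_flat, F)
```

Because only stretching is penalized, the shared boundary is free to pull inward while the two panels separate, which is what lets a flat two-sided model bulge at all.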

As for mapping this model to the images, I had previously written some code to manually make models from images (by clicking on corresponding points in multiple images). I figured that something like this would be sufficient for aligning the template model. In the UI, you click on a point in multiple images, and the corresponding 3D point is triangulated. These points are then associated with the corresponding points on the mesh (again manually). Finally, an optimization that fits these points while minimizing the deformation of the clothing would be necessary. Originally, I intended to just try this on a couple of frames individually, and later try some temporal refinement.
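Triangulating a clicked point from several calibrated views is a small linear least-squares problem. A minimal sketch of the standard linear (DLT) method follows, assuming each camera is described by a 3x4 projection matrix. The calibration values and the `triangulate`/`project` names are made up for illustration and are not taken from the original tool.

```python
import numpy as np

def triangulate(Ps, xs):
    """Linear (DLT) triangulation of one point clicked in several views.

    Ps: list of 3x4 projection matrices; xs: matching (x, y) pixel clicks.
    """
    rows = []
    for P, (x, y) in zip(Ps, xs):
        # x ~ P X gives two independent linear equations per view
        rows.append(x * P[2] - P[0])
        rows.append(y * P[2] - P[1])
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    X = Vt[-1]  # right singular vector with the smallest singular value
    return X[:3] / X[3]

def project(P, X):
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Toy setup: two cameras with a 1-unit horizontal baseline (made-up calibration).
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
X_true = np.array([0.2, -0.1, 5.0])
clicks = [project(P1, X_true), project(P2, X_true)]
X_est = triangulate([P1, P2], clicks)
```

With real (noisy) clicks, normalizing the pixel coordinates before building the system usually improves conditioning; that step is omitted here.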

For the optimization, my first thought was to just use the Laplacian mesh deformation framework, but a naive implementation is not rotation invariant and doesn’t work well. After ICCV I noticed that the “as-rigid-as-possible” deformation framework (which is similar to Laplacian coordinates) would work perfectly for this. I plugged in the code and quickly realized that I was going to need a large number of constraints, many more than I had originally thought would be necessary.
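The as-rigid-as-possible idea alternates two steps: fit the best rotation to each vertex's one-ring of edges, then solve a Laplacian-style linear system for the vertex positions with the constrained vertices held fixed. Below is a minimal sketch, assuming uniform edge weights (the original ARAP formulation uses cotangent weights), hard positional constraints for the clicked points, and a dense solver that is only suitable for small meshes. The names `arap_deform`, `fit_rotations`, `arap_energy` and the optional initial guess `P0` (e.g., the previous frame's fit) are hypothetical, not the code mentioned above.

```python
import numpy as np

def one_ring(F, n):
    """Sorted neighbor lists for each vertex of a triangle mesh."""
    nbrs = [set() for _ in range(n)]
    for a, b, c in F:
        nbrs[a].update((b, c))
        nbrs[b].update((a, c))
        nbrs[c].update((a, b))
    return [sorted(s) for s in nbrs]

def fit_rotations(V, P, nbrs):
    """Local step: best rotation per vertex from rest edges to deformed edges."""
    R = np.empty((len(V), 3, 3))
    for i, Ni in enumerate(nbrs):
        S = (V[i] - V[Ni]).T @ (P[i] - P[Ni])  # 3x3 covariance (uniform weights)
        U, _, Vt = np.linalg.svd(S)
        if np.linalg.det(Vt.T @ U.T) < 0:  # flip to avoid a reflection
            U[:, -1] *= -1
        R[i] = Vt.T @ U.T
    return R

def arap_energy(V, P, nbrs):
    """Sum over i, j of ||(p_i - p_j) - R_i (v_i - v_j)||^2 with optimal R_i."""
    R = fit_rotations(V, P, nbrs)
    return sum(np.sum(((P[i] - P[Ni]) - (V[i] - V[Ni]) @ R[i].T) ** 2)
               for i, Ni in enumerate(nbrs))

def arap_deform(V, F, handles, targets, P0=None, iters=20):
    """Hypothetical sketch: handle vertices are pinned to targets (hard constraints)."""
    n = len(V)
    nbrs = one_ring(F, n)
    L = np.zeros((n, n))  # uniform-weight graph Laplacian
    for i, Ni in enumerate(nbrs):
        L[i, i] = len(Ni)
        L[i, Ni] = -1.0
    handles = np.asarray(handles)
    free = np.setdiff1d(np.arange(n), handles)
    P = np.array(V if P0 is None else P0, dtype=float)
    P[handles] = targets
    L_ff, L_fh = L[np.ix_(free, free)], L[np.ix_(free, handles)]
    for _ in range(iters):
        R = fit_rotations(V, P, nbrs)
        b = np.zeros((n, 3))
        for i, Ni in enumerate(nbrs):
            # sum over neighbors j of 0.5 * (R_i + R_j) (v_i - v_j)
            b[i] = 0.5 * np.einsum('kab,kb->a', R[i] + R[Ni], V[i] - V[Ni])
        # global step: solve for free vertices, moving known handles to the rhs
        P[free] = np.linalg.solve(L_ff, b[free] - L_fh @ P[handles])
    return P

# Toy usage: pin three corners of a tetrahedron and drag them sideways.
V = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
F = np.array([[0, 2, 1], [0, 1, 3], [1, 2, 3], [0, 3, 2]])
targets = V[:3] + np.array([0.3, 0.0, 0.0])
P = arap_deform(V, F, [0, 1, 2], targets)
```

Each local and global step can only lower the energy, so the result is never worse than simply moving the handles; how many constraints a real garment needs is a separate question, as the text notes.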

One of the biggest problems was that, when I wore the shirt, the top of the template model’s arm did not necessarily match the top of my arm, meaning that parts of the template that were front-facing were not observed. There was no easy way to constrain these parts to lie behind the visible surface (I only had camera views of the front). Some silhouette constraints would help with this problem. The other problem (which may be due to the shirt) was that it is really hard to determine correspondences between the input and the mesh when the sleeve has twisted. I think I have to give up on this approach for now. One alternative is to use animated cloth data (although cloth simulated in Blender seems to be too dynamic and doesn’t exhibit wrinkles). Another is to manually introduce wrinkles into a synthetic model (I have just finished reading some papers on this and have a couple of ideas). The third would be to use some color-coded pattern to automatically obtain dense correspondences (e.g., google “garment motion capture using color-coded patterns”, or go here).

The last one is obviously too much work for what I actually want to do with it, but it is tempting.

Clicking interface (left) and initial/mapped model (right)