Posts Tagged opengl

wtWidgets

Overview

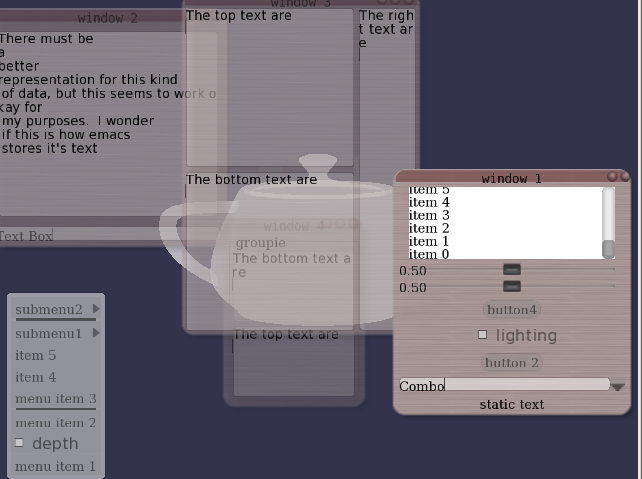

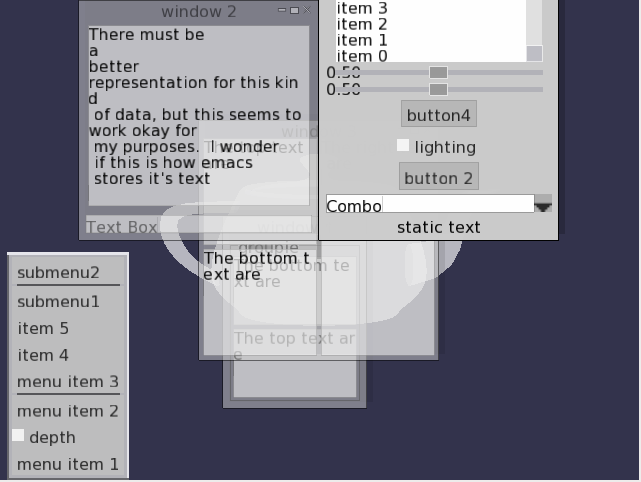

This was my second attempt at a OpenGL UI toolkit (the first one being GWindows, used in the NES emulator). When I started, I was most concerned with adding animations, and how to interface with UI events. The events were handled using boost signals and slots, which is (in my opinion) the perfect way to implement UI events. The toolkit has customizable widgets (allowing for different fonts, backgrounds, and styles), as well as hooks for allowing animations to be attached. The toolkit has the typical windowing elements: windows, buttons (regular and check), menus, sliders, group boxes, layout managers, split panes, combo boxes, and text edits.

Most of the feel (of look and feel) is established by signals and slots. For example, upon creation a widget emits a look and feel signal, which allows for any slots (e.g., sounds or animations) to be attached to the widgets various UI signals (e.g., button pressed, window exiting, etc.). Backgrounds are controlled with textures and programmable shaders (old-school fragment programs that is).

Unfortunately, I wasn’t super concerned with usability, so laying out new widgets has to be done in code (and it is a little painful). The XML schema for styles could also use some work.

I have only used this code in a couple of projects. It is used in part of my Ph.D project for tracking humans (and one of the demos), and it is also coarsely integrated into the GT demo.

Styles

Here are a couple sample styles (follow links to view the movies: plain.flv style1.flv style2.flv).

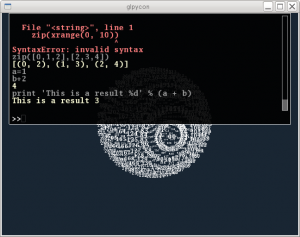

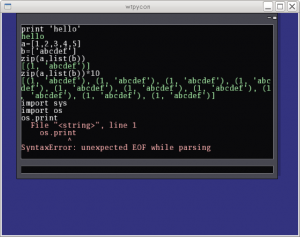

Part of the UI was developed in conjunction with a UI inferface to python (I call it PyCon; I realize this is also the name of a conference). The console allows commands to be issued from a command line prompt from within your program. All you have to do to use it is create the corresponding python wrappers for your classes (e.g., using boost::python, swig, sip, or whatever other tool you prefer. The low-level python console interface allows issuing commands (and auto-completing them). The UI is similar and wraps this low-level interface with a UI for issuing commands, displaying partial autocompleted results, scrolling through output, and remembering previously issued commands. I personally think that with the autocomplete, this is sometimes more useful than the actual python console.

Unfortunately, the python console will not work in windows (as it uses “dup” and “dup2” in order to grab the python interpreter stdin and stdout).

History

Although I can’t remember exactly what possessed me to start this project, I think it had something to do with seeing other nice interfaces (both in GL and for the desktop). One in particular was the original version of Beryl (or Desktop effects in Linux) that included the wobbly windows. I designed my own wobbly windows based on a spring network with cubic polynomial subdivision for rendering (wobbles: avi flv). This all started sometime around 2006, or 2007. See the source code (it may not be pretty: springs.cpp).

(non)-rigid tracking

In the past little while I have been trying to determine a way that dense geometric deformations can be reconstructed and tracked over time from a vision system. The main application is to track dense geometry deformations of a human subject over time so that they can be played back under novel view and animation conditions.

It is assumed there are only a few cameras (with known pose calibration) observing the scene, so it is desirable to take advantage of as much temporal information as possible during the reconstruction. As a simpler starting point for this problem, I felt it appropriate to work on the reconstruction of dense geometry for a moving human head.

Many existing tracking methods start with some known dense geometry, or assume that the geometry can be reconstructed at each time instant. In our case, neither of these assumptions hold, the latter because we have only a few cameras. The general problem is therefore to reconstruct a dense model, and its deformations over time.

A part of the problem is to reconstruct the motion of the rigid parts. This can be done using all available cues, e.g., texture and stereo. And it too can be accomplished with different representations (e.g., a set of points, a mesh, or level-sets). In these notes, I considered this problem alone: given an approximate surface representation for a non-rigid object, find the transformations of the surface over time that best agree with the input images. Keep in mind, however, that these ideas are motivated by the bigger problem, where we would like to refine the surface over time.

In this example, intensity color difference (SSD) tracking was used to track a skinned model observed from several camera views. The implementation is relatively slow (roughly 1 frame a second), but I have also implemented a GPU version that is almost 7 fps (for 12000 points and two bones, one 6.dof base/shoulders and a 3 dof neck, with 4 cameras).

There is a pdf document with more details posted as well.

Some videos are given below.

An example input sequence. The video was captured from 4 synchronized and calibrated cameras positioned around my head.

If we just run a standard stereo algorithm, we will get some noisy results. Ideally we would like to track the head over time and integrate this sort of stereo information in the coordinate frame of the head. This part is hard as the surface is open.

Tracking the head alone would allow the registration of these stereo results in a common coordinate frame, where temporal coherency makes more sense. The video below demonstrates this idea, although I haven’t found a good solution for merging the stereo data.

| Tracked head | Depth registered in coordinate frame of head |

The following videos are more related to the tracking using a skinned mesh and an SSD score. In the following videos the tracked result on the above input sequence using a mesh generated from the first frame is illustrated below. The mesh has two bones: one 6 D.O.F for the shoulders and another for the 3 D.O.F. neck/head.

The tracking currently ignores visibility; this can cause problems when many of the points are not visible. An example of this mode of failure is given in the following sequence. This sequence was captured under similar conditions, although the left-right motion is more dramatic (more vertices are occluded). Additionally, this sequence has some non-rigid motion.

| Input sequence | noisy depth from stereo |

| Tracked head and shoulders. Notice the failure when the head is turned too far to the left. | |

These videos, and the original avi’s are available in the following two directories:

seq0-movies and seq1-movies. You will need vlc, or mplayer, ffmpeg, (maybe divx will work) to view the avi’s.

Code for Rodrigues’ derivatives: roddie. Included as I merged this code into my articulated body joint.