Posts Tagged Tracking

Region-based tracking

I recently had to revisit some tracking from last year. In the process, I uncovered some other relevant works that were doing region-based tracking by essentially segmenting the image at the same time as registering a 3D object. The formulation is similar to Chan-Vese for image segmentation, however, instead of parameterizing the curve or region directly wiht a level-set, the segmentation is parameterized by the 3D pose parameters of a known 3D object. The pose parameters are then found by a gradient descent.

Surprisingly, the formulation is pretty simple. There are two similar derivations:

1) PWP3D: Real-time segmentation and racking of 3D objects, Prisacariu & Reid

2) S. Dambreville, R. Sandhu, A.Yezzi, and A. Tannenbaum. Robust 3D Pose Estimation and Efficient 2D Region-Based Segmentation from a 3D Shape Prior. In Proc. European Conf. on Computer Vision (ECCV), volume 5303, pages 169-182, 2008.

3) There is another one from 2007. Schamltz.

This is similar to aligning a shape to a known silhouette, but in this case the silhouette is estimated at the same time.

The versions are really similar, and I think the gradient in the two versions can be brought into agreement if you use the projected normal as an approximation to the gradient of the signed distance function (you then have to use the fact that = 0). This should actually benefit implementation 1) because you don’t need to compute the signed distance of the projected mesh.

I implemented the version (2), in what took maybe 1 hour late at night, and then a couple more hours of fiddling and testing it the next day. Version 1) claims real-time; my implementation isn’t real-time, but is probably on the order of a frame or 2 a second. The gradient descent seems quite dependent on the step size parameter, which IMO needs to change based on the scene context (size ot object, contrast between foreground and background).

Here are some of the results. In most of the examples, I used a 3D model of a duck. The beginning of the video illustrates that the method can track with a poor starting point. In fact, the geometry is also inaccurate (it comes from shape-from-silhouette, and has some artifacts on the bottom). In spite of this, the tracking is still pretty solid, although it seems more sensitive to rotation (not sure if this is just due to the parameterization).

Here are 4 videos illustrating the tracking (mostly on the same object). The last one is of a skinned geometry (probably could have gotten a better example, but it was late, and this was just for illustration anyhow).

http://www.youtube.com/watch?v=ffxXYGXEPOQ

http://www.youtube.com/watch?v=ygn5aY8L-wQ

http://www.youtube.com/watch?v=__3-QB_7jhM

http://www.youtube.com/watch?v=4lgemcZR87E

Linked-flow for long range correspondences

This last week, I thought I could use linked optic flow to obtain long range correspondences for part of my project. In fact, this simple method of linking pairwise flow works pretty well. The idea is to compute pairwise optic flow and link the tracks over time. Tracks that have too much forward-backward error (the method is similar to what is used in this paper, “Object segmentation by long term analysis of point trajectories”, Brox and Malik).

I didn’t end up using it for anything, but I wanted to at least put it up the web. In my implementation I used a grid to initialize the tracks and only kept tracks that had pairwise frame motion greater than a threshold, that were backward forward consistent (up to a threshold), and that were consistent for enough frames (increasing this threshold gives less tracks). New tracks can be added at any frame when there are no tracks close enough. The nice thing about this is that tracks can be added in texture-less region. In the end, the results are similar to what you would expect from the “Particle Video” method.

Here are a few screenshots on two scenes (rigid and non-rigid).

But it is better to look at the video:

Iterative mesh deformation tracking

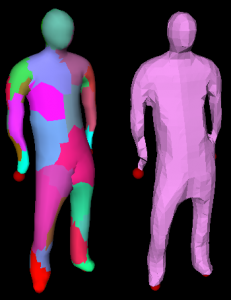

Another idea that interested me from ICCV (or 3DIM actually) was related to tracking deforming geometry, using only raw geometry no color. The paper in particular ( Iterative Mesh Deformation for Dense Surface Tracking, Cagniart et al.) didn’t require even geometric feature extraction, but rather relied on a non-rigid deformation scheme that was similar to iterated closest points (ICP). The only assumption is an input geometry that has the same topology of what you would like to track. As for the ICP component, instead of doing this for the entire model, the target mesh contributes a displacement (proportional to cosine between normals) to its closest vertex on the deforming source mesh. The source mesh is then partitioned into a set of patches, and each patch tries to compute a transformation that satisfies the point-wise constraints. The positional constraint on the patch center is then used in a Laplacian (or more specifically, the As-Rigid-As-Possible (ARAP) Surface deformation framework) to deform the geometry. This is then iterated, and a new partitioning is used at each frame. To ensure some more rigidity of the model, the positional constraint on the patch center is influenced by it’s neighboring patches, as well as by the transformation of the original source mesh. Look at the paper for full details.

I needed the ARAP for some other project (see previous blog), and decided to go ahead and try to implement this (I think it was actually last Friday that I was playing around with it). Anyhow, this week I knew that I had to test it on some more realistic data; my first tests were of tracking perturbations of the same geometry (or of a deforming geometry with the same topology). To generate some synthetic data, I rendered 14 viewpoints of a synthetic human subject performing a walking motion (the motion is actually a drunk walk from the CMU motion capture database). For each time frame, I computed the visual hull and used the initial geometry to try and track the deforming geometry. This gives a consistent cross-parameterization of the meshes over the entire sequence.

After tweaking some of the parameters (e.g., the number of iterations, and the amount of rigidity, the number of patches), I was able to get some decent results. See some of the attached videos.

Links to movies (if the above didn’t work):

Source blender file (to create the sequence, some of the scripts I use often are in the text file): person-final-small

(non)-rigid tracking

In the past little while I have been trying to determine a way that dense geometric deformations can be reconstructed and tracked over time from a vision system. The main application is to track dense geometry deformations of a human subject over time so that they can be played back under novel view and animation conditions.

It is assumed there are only a few cameras (with known pose calibration) observing the scene, so it is desirable to take advantage of as much temporal information as possible during the reconstruction. As a simpler starting point for this problem, I felt it appropriate to work on the reconstruction of dense geometry for a moving human head.

Many existing tracking methods start with some known dense geometry, or assume that the geometry can be reconstructed at each time instant. In our case, neither of these assumptions hold, the latter because we have only a few cameras. The general problem is therefore to reconstruct a dense model, and its deformations over time.

A part of the problem is to reconstruct the motion of the rigid parts. This can be done using all available cues, e.g., texture and stereo. And it too can be accomplished with different representations (e.g., a set of points, a mesh, or level-sets). In these notes, I considered this problem alone: given an approximate surface representation for a non-rigid object, find the transformations of the surface over time that best agree with the input images. Keep in mind, however, that these ideas are motivated by the bigger problem, where we would like to refine the surface over time.

In this example, intensity color difference (SSD) tracking was used to track a skinned model observed from several camera views. The implementation is relatively slow (roughly 1 frame a second), but I have also implemented a GPU version that is almost 7 fps (for 12000 points and two bones, one 6.dof base/shoulders and a 3 dof neck, with 4 cameras).

There is a pdf document with more details posted as well.

Some videos are given below.

An example input sequence. The video was captured from 4 synchronized and calibrated cameras positioned around my head.

If we just run a standard stereo algorithm, we will get some noisy results. Ideally we would like to track the head over time and integrate this sort of stereo information in the coordinate frame of the head. This part is hard as the surface is open.

Tracking the head alone would allow the registration of these stereo results in a common coordinate frame, where temporal coherency makes more sense. The video below demonstrates this idea, although I haven’t found a good solution for merging the stereo data.

| Tracked head | Depth registered in coordinate frame of head |

The following videos are more related to the tracking using a skinned mesh and an SSD score. In the following videos the tracked result on the above input sequence using a mesh generated from the first frame is illustrated below. The mesh has two bones: one 6 D.O.F for the shoulders and another for the 3 D.O.F. neck/head.

The tracking currently ignores visibility; this can cause problems when many of the points are not visible. An example of this mode of failure is given in the following sequence. This sequence was captured under similar conditions, although the left-right motion is more dramatic (more vertices are occluded). Additionally, this sequence has some non-rigid motion.

| Input sequence | noisy depth from stereo |

| Tracked head and shoulders. Notice the failure when the head is turned too far to the left. | |

These videos, and the original avi’s are available in the following two directories:

seq0-movies and seq1-movies. You will need vlc, or mplayer, ffmpeg, (maybe divx will work) to view the avi’s.

Code for Rodrigues’ derivatives: roddie. Included as I merged this code into my articulated body joint.

Color Tracker

This last friday: I was reading a paper that integrated time of flight sensors with stereo cameras for real-time human tracking and I came across some old papers for color skin tracking. I couldn’t help myself from trying out an implementation of the tracker: color_tracker and color_tracker2

It is really simple, but works pretty good for fast motions.

Another reference was on boosting for detection of faces in images. I was tempted to try and implement a version. It’s a good thing it was closer to the end of a Friday, so I didn’t start…which is probably a good thing as I have little use for such a beast.

Vids will probably only play if you have VLC or Divx installed (encoded with ffmpeg and the usual incompatibility problems)